High-level frameworks for PyTorch

Several popular higher-level frameworks are built on top of PyTorch and make the code easier to write and run:

- ignite is maintained under the PyTorch organization on GitHub. It is the easiest to combine with raw PyTorch, but not the easiest to use.

- PyTorch-lightning is probably the most popular. It makes some operations (e.g. using multiple GPUs) very easy, but some of the Lightning products are not free.

- fastai adds several interesting concepts to PyTorch borrowed from other languages, but debugging can be challenging.

- Catalyst

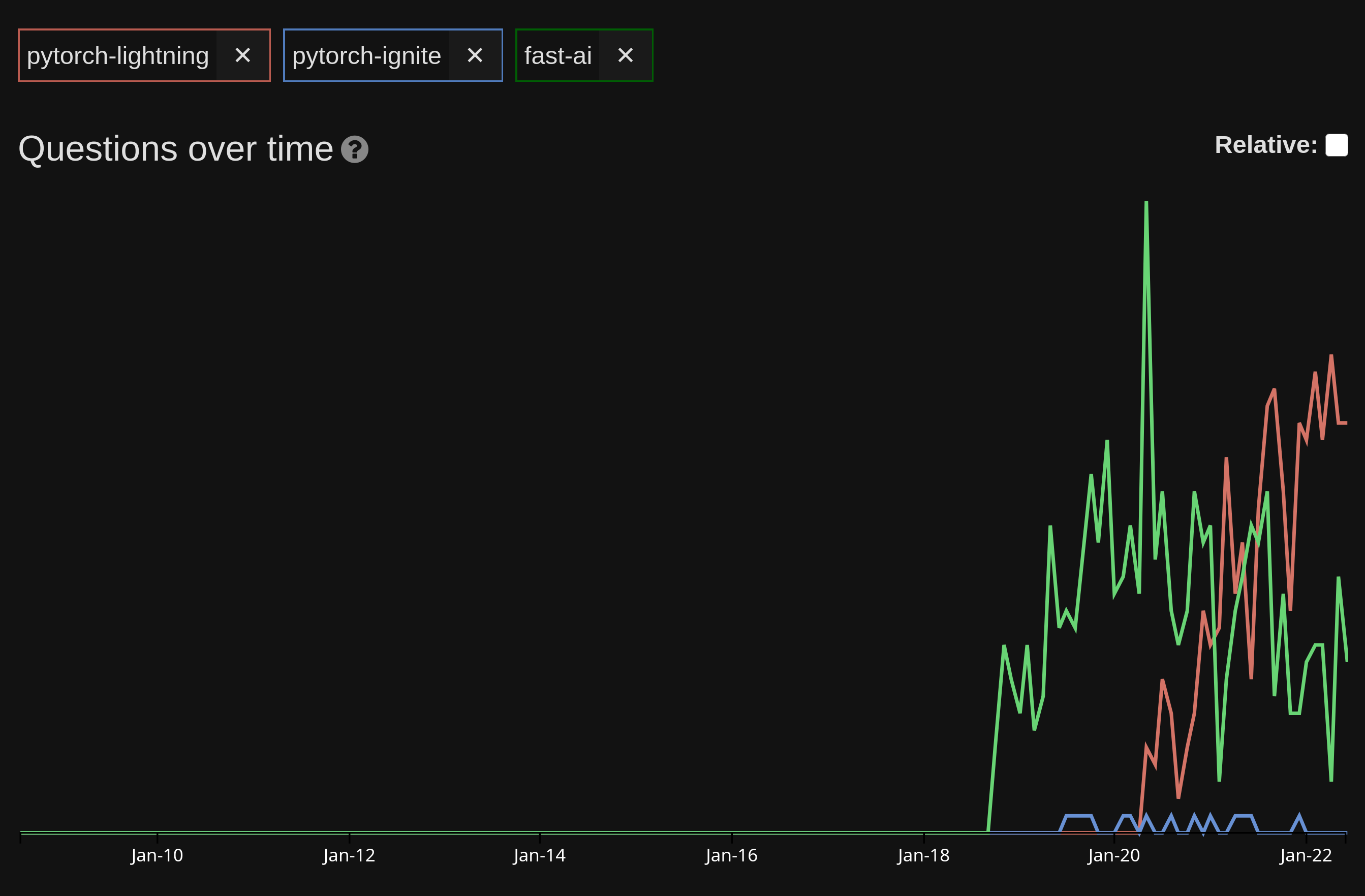

The following tag trends on Stack Overflow might give an idea of the popularity of these frameworks over time (catalyst doesn’t have any Stack Overflow tag):

If this data is to be believed, ignite never really took off (it also has fewer stars on GitHub). fastai was extremely popular when it came out, but its usage is declining. PyTorch-lightning is currently the most popular.

Should you use one (and which one)?

Learning raw PyTorch is probably the best option for research. PyTorch is stable and here to stay. Higher-level frameworks may rise and fall in popularity, and today’s popular one may see little usage tomorrow.

Raw PyTorch is also the most flexible, the closest to the actual computations happening in your model, and probably the easiest to debug.
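To make "closest to the actual computations" concrete, here is a minimal sketch of a raw PyTorch training loop. The data, layer sizes, and hyperparameters are made up for illustration; the point is only that every step (forward pass, loss, backward pass, parameter update) is an explicit line you can inspect or put a breakpoint on.

```python
import torch
from torch import nn

torch.manual_seed(0)  # make the toy run reproducible

# Hypothetical toy data: 64 samples, 10 features, one regression target
X = torch.randn(64, 10)
y = torch.randn(64, 1)

# Small illustrative network (sizes chosen arbitrarily)
model = nn.Sequential(nn.Linear(10, 16), nn.ReLU(), nn.Linear(16, 1))
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

losses = []
for epoch in range(5):
    optimizer.zero_grad()      # clear gradients from the previous step
    pred = model(X)            # forward pass
    loss = loss_fn(pred, y)    # compute the loss
    loss.backward()            # backpropagation
    optimizer.step()           # update the parameters
    losses.append(loss.item())

print(losses)
```

Higher-level frameworks wrap this loop for you, which saves typing but also moves these steps out of sight.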

Depending on your deep learning trajectory, you might find some of these tools useful though:

- If you work in industry, you might want or need to get results quickly.

- Some operations (e.g. parallel execution on multiple GPUs) can be tricky in raw PyTorch, while being extremely streamlined when using e.g. PyTorch-lightning.

- Even in research, it might make sense to spend more time thinking about the structure of your model and the functioning of a network instead of getting bogged down in writing code.

Before moving to any of these tools, it is probably a good idea to become comfortable with raw PyTorch: use these tools to simplify your workflow, not to cloud your understanding of what your code is doing.