Experiment tracking with MLflow

Marie-Hélène Burle

November 25, 2025

Experiment tracking

Lots of moving parts …

Deep learning experiments come with a lot of components:

- Datasets

- Model architectures

- Hyperparameters

While developing an efficient model, various architectures will be trained on various datasets and tuned with various hyperparameters

… making for challenging tracking

*hp = hyperparameter

How did we get performance19 again? 🤯

Experiment tracking tools

The solution to this complexity is to use an experiment tracking tool such as MLflow and, optionally, a data versioning tool such as DVC

MLflow

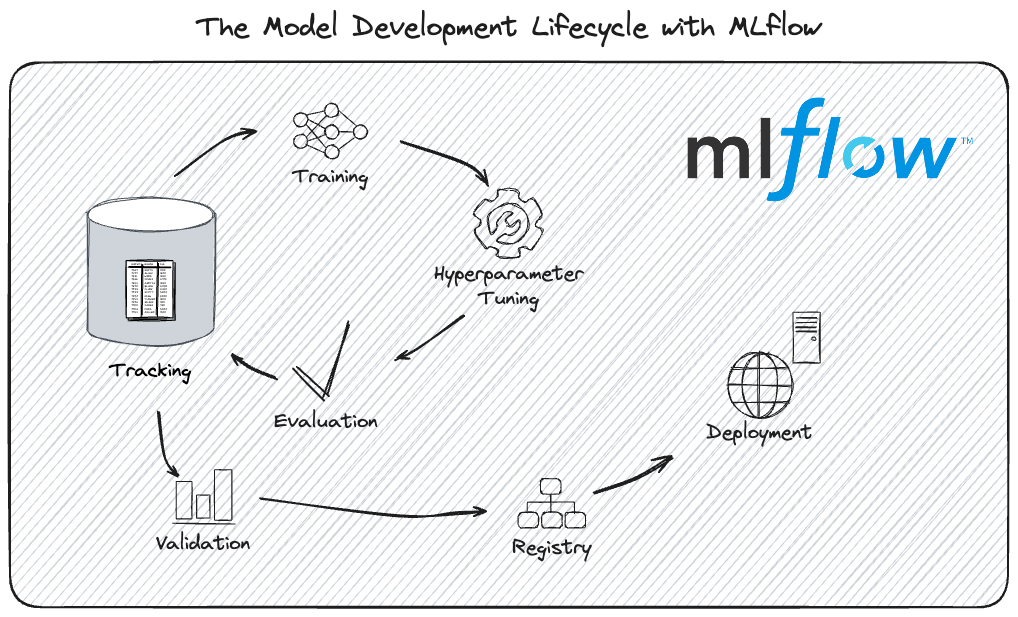

Platform for AI life cycle

FOSS & compatible

- Open-source

- Works with any ML or DL framework

- Vendor-neutral if you run a server on a commercial platform

- Can be combined with DVC for dataset versioning

- Works with any hyperparameter tuning framework ➔ e.g. integrations with Optuna, Ray Tune, and Hyperopt

Used by many proprietary tools

The foundation of many proprietary no-code/low-code tuning platforms, which simply add a layer on top so that users interact through text rather than code

e.g. Microsoft Fabric, FLAML

Limitations

MLflow Projects do not (yet) support uv

Some functionality for deployment and production at large companies is missing (but this is irrelevant for research, and no FOSS alternative exists)

Definitions

Run: single execution of a model training event

Model signature: a formal description of a model’s input and output data structure, data types, and names of columns or features
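To make the definition concrete, here is a minimal sketch of the information a signature carries. The `Column` and `Signature` classes and the column names are hypothetical illustrations, not MLflow's own classes; in MLflow itself, signatures are usually inferred from example data with `mlflow.models.infer_signature`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Column:
    """One named, typed column or feature (hypothetical helper)."""
    name: str
    dtype: str  # e.g. "double", "long", "string"


@dataclass(frozen=True)
class Signature:
    """Describes what a model expects as input and returns as output."""
    inputs: tuple[Column, ...]
    outputs: tuple[Column, ...]

    def check_input(self, row: dict) -> bool:
        """True if `row` has exactly the expected input column names."""
        return set(row) == {col.name for col in self.inputs}


# Example column names are made up for illustration.
signature = Signature(
    inputs=(Column("sepal_length", "double"), Column("sepal_width", "double")),
    outputs=(Column("species", "string"),),
)
print(signature.check_input({"sepal_length": 5.1, "sepal_width": 3.5}))
```

Recording this structure alongside a model lets mismatched inputs be caught before inference rather than during it.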

Installing MLflow

With uv

Create a uv project:

Install MLflow:
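With uv, these two steps are usually `uv init` (run inside the project directory) followed by `uv add mlflow`. To confirm from Python that the package landed in the project environment, a small check such as the following can help. `is_installed` and the script name are hypothetical, not part of uv or MLflow.

```python
import importlib.util


def is_installed(package: str) -> bool:
    """Return True if `package` can be imported in the current environment."""
    return importlib.util.find_spec(package) is not None


# Run with `uv run python check_install.py` so that the project's
# environment is used (the script name is only an example).
print("mlflow installed:", is_installed("mlflow"))
```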

Tracking models

Overview

Track models at checkpoints

Compare with different datasets

Visualize with tracking UI

MLflow tracking setups


Remote tracking server

For team development

Here we use PostgreSQL, which works well for managing a database in a client-server setup

(Requires installing the psycopg2 package)
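As a rough sketch of what launching such a server involves, the snippet below assembles an `mlflow server` command that points at a PostgreSQL backend store. The user, password, host, and database names are placeholders, the two helper functions are hypothetical, and the artifact store configuration is left out.

```python
from urllib.parse import quote


def postgres_uri(user: str, password: str, host: str, port: int, db: str) -> str:
    """Build a PostgreSQL URI, percent-encoding the credentials."""
    return (
        f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{db}"
    )


def server_command(backend_uri: str, host: str = "0.0.0.0", port: int = 5000) -> list[str]:
    """Return the argument list for launching `mlflow server`."""
    return [
        "mlflow", "server",
        "--backend-store-uri", backend_uri,
        "--host", host,
        "--port", str(port),
    ]


# Placeholder credentials and database name, for illustration only.
uri = postgres_uri("mlflow_user", "p@ss word", "localhost", 5432, "mlflow_db")
print(" ".join(server_command(uri)))
```

Percent-encoding the credentials matters because characters such as `@` or spaces in a password would otherwise break the URI.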

Log tracking data

The workflow looks like this:
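A minimal sketch of one such workflow, assuming a toy training loop in place of a real model: choose an experiment, open a run, log the parameters once, then log a metric at every epoch. The experiment name, the function names, and the fake loss values are illustrative assumptions.

```python
import math


def toy_training_losses(learning_rate: float, epochs: int) -> list[float]:
    """Stand-in for a real training loop: returns one fake loss per epoch."""
    return [math.exp(-learning_rate * epoch) for epoch in range(1, epochs + 1)]


def log_training_run(learning_rate: float = 0.1, epochs: int = 5) -> None:
    """Log the parameters and per-epoch losses of one run to MLflow."""
    import mlflow  # imported here so the toy loop above runs without MLflow

    mlflow.set_experiment("demo-experiment")  # hypothetical experiment name
    with mlflow.start_run():
        # Parameters are logged once per run.
        mlflow.log_params({"learning_rate": learning_rate, "epochs": epochs})
        # Metrics are logged with a step so that the UI can plot them over time.
        losses = toy_training_losses(learning_rate, epochs)
        for epoch, loss in enumerate(losses, start=1):
            mlflow.log_metric("loss", loss, step=epoch)
```

Calling `log_training_run()` in a session with MLflow installed records one run under the chosen experiment.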

Organize runs

- Experiments

- Child runs

- Tags
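These three mechanisms can be combined, as in the following sketch: an experiment groups related runs, a parent run holds one nested child run per configuration, and a tag labels the parent. The experiment name, tag, and helper names are hypothetical.

```python
def run_name(params: dict) -> str:
    """Build a readable child-run name such as 'lr=0.01-batch=32'."""
    return "-".join(f"{key}={value}" for key, value in params.items())


def log_sweep(configs: list[dict]) -> None:
    """Log one parent run with one nested child run per configuration."""
    import mlflow  # lazy import: `run_name` stays usable without MLflow

    mlflow.set_experiment("demo-sweep")  # hypothetical experiment name
    with mlflow.start_run(run_name="sweep"):
        mlflow.set_tag("purpose", "illustration")  # hypothetical tag
        for params in configs:
            # nested=True attaches the child run to the active parent run.
            with mlflow.start_run(run_name=run_name(params), nested=True):
                mlflow.log_params(params)
```

For example, `log_sweep([{"lr": 0.01, "batch": 32}, {"lr": 0.1, "batch": 32}])` would create one parent run with two child runs.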

Visualize logs

- Open http://<host>:<port> in your browser

Example:

For a local server on port 5000, open http://127.0.0.1:5000

- Connect your running session to the server:

Example for a local server on port 5000:
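A minimal sketch of that connection, assuming the server is reachable over plain HTTP. `tracking_uri` and `connect` are hypothetical helper names; `mlflow.set_tracking_uri` is the MLflow call doing the work.

```python
def tracking_uri(host: str, port: int) -> str:
    """Build the HTTP address of a tracking server."""
    return f"http://{host}:{port}"


def connect(host: str = "127.0.0.1", port: int = 5000) -> None:
    """Point the current Python session at the tracking server."""
    import mlflow  # imported inside the function; requires MLflow

    mlflow.set_tracking_uri(tracking_uri(host, port))


print(tracking_uri("127.0.0.1", 5000))
```

Once `connect()` has been called, subsequent runs in the session are logged to that server.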

Tracking datasets

Hyperparameter tuning

Goal of tuning

Find the optimal set of hyperparameters that maximizes a model’s predictive accuracy and performance

➔ Find the right balance between high bias (underfitting) and high variance (overfitting) to improve the model’s ability to generalize and perform well on new, unseen data

Tuning frameworks

Hyperparameter optimization used to be done manually, following a systematic grid pattern, which was extremely inefficient
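To see why an exhaustive grid scales poorly, the sketch below enumerates every combination of a small grid. The hyperparameter names and values are made up for illustration.

```python
from itertools import product

# Hypothetical search grid: every name and value is an example.
grid = {
    "learning_rate": [1e-4, 1e-3, 1e-2, 1e-1],
    "batch_size": [16, 32, 64],
    "dropout": [0.0, 0.1, 0.3, 0.5],
    "hidden_units": [64, 128, 256],
}

# One dict per point of the grid, i.e. per training run to launch.
combinations = [dict(zip(grid, values)) for values in product(*grid.values())]
print(len(combinations), "training runs needed")
```

The number of runs is the product of the number of values per hyperparameter, so each added hyperparameter multiplies the cost.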

Nowadays, there are many frameworks that do it automatically, faster, and better

Example:

Workflow

- Define an objective function

- Define a search space

- Minimize the objective over the space
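The three steps above can be sketched with a plain random search, used here as a simple stand-in for the smarter samplers that tuning frameworks provide. The toy objective replaces what would normally be "train the model and return the validation loss", and all names and bounds are assumptions.

```python
import random


def objective(params: dict) -> float:
    """Toy loss with its minimum at learning_rate=0.01 and dropout=0.2."""
    return (params["learning_rate"] - 0.01) ** 2 + (params["dropout"] - 0.2) ** 2


# Search space: (low, high) bounds for each hyperparameter.
search_space = {"learning_rate": (0.0001, 0.1), "dropout": (0.0, 0.5)}


def minimize(objective, space: dict, n_trials: int = 200, seed: int = 0):
    """Random search: sample uniformly and keep the best trial."""
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(n_trials):
        params = {name: rng.uniform(low, high) for name, (low, high) in space.items()}
        loss = objective(params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


best_params, best_loss = minimize(objective, search_space)
print(best_params, best_loss)
```

A tuning framework keeps the same three-part structure but replaces the uniform sampling with a strategy that learns from earlier trials.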